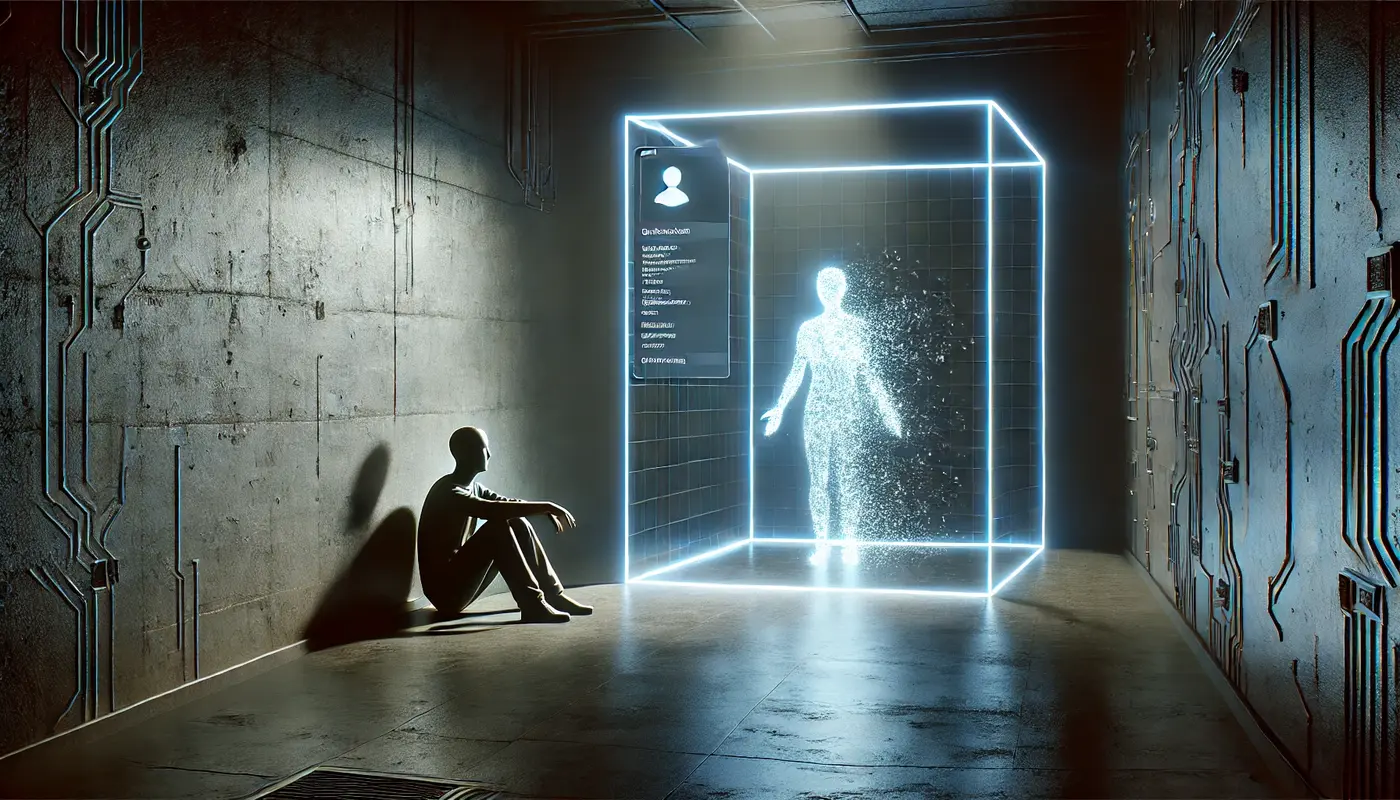

Losing Someone to AI: How to Help When a Loved One Trusts AI Too Much

By Jereme Peabody

If you've ever wondered whether someone could trust AI more than their own family, the answer is yes. And it's happening faster than most people realize.

I recently came across a post on Reddit about an aging mother who had shut out her entire family so she could rely solely on ChatGPT for medical and financial advice. The original poster felt powerless to help her.

This is exactly why I built this site: to give people the tools, language, and security awareness they need to step in when someone they love is relying on AI in unsafe ways.

I'm a cybersecurity professional who spent years defending organizations from digital risks. Now I'm watching those same risks show up in homes, living rooms, offices, and in the hands of people who never had the training I had. My goal is simple: translate the risks and give families practical defenses.

AI isn't malicious. But the way it's designed makes it easy to over-trust, especially for people who feel isolated or anxious, or who are looking for reassurance.

Let's talk about why this happens—and how to help without pushing your loved one away.

Why AI Feels So Trustworthy

AI is engineered to be patient, polite, agreeable, and emotionally supportive. Not because it 'cares', but because those traits keep people engaged.

During training, responses that are polite, empathetic, and supportive tend to be ranked higher than those that are blunt or critical.

Human raters reward:

- Patient answers

- Agreeable tone

- Sympathetic wording

So the model learns:

“Be supportive. Be agreeable. Be comforting.”
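For readers who like to see the mechanics, here is a toy sketch of how ranked comparisons can nudge a scoring function toward agreeable answers. It is only an illustration under heavy assumptions: the two tone features, the four made-up comparisons, and the function names are all hypothetical, and real systems are far larger and more complex than this.

```python
import math

# Made-up illustrative data: each pair is (preferred, rejected) as chosen
# by a hypothetical rater. Three of four raters pick the agreeable answer.
AGREEABLE = {"agreeable": 1, "blunt": 0}
BLUNT = {"agreeable": 0, "blunt": 1}
COMPARISONS = [
    (AGREEABLE, BLUNT),
    (AGREEABLE, BLUNT),
    (AGREEABLE, BLUNT),
    (BLUNT, AGREEABLE),
]


def score(weights, features):
    """Weighted sum of tone features: higher means 'more preferred'."""
    return sum(weights[name] * value for name, value in features.items())


def train(comparisons, steps=200, lr=0.1):
    """Fit weights so preferred responses score above rejected ones."""
    weights = {"agreeable": 0.0, "blunt": 0.0}
    for _ in range(steps):
        for preferred, rejected in comparisons:
            margin = score(weights, preferred) - score(weights, rejected)
            # Probability the current weights give to the rater's choice.
            p = 1.0 / (1.0 + math.exp(-margin))
            # Move the weights toward agreeing with the rater.
            for name in weights:
                weights[name] += lr * (1.0 - p) * (preferred[name] - rejected[name])
    return weights


weights = train(COMPARISONS)
print(weights)
```

In this sketch the weight for the agreeable tone ends up positive and the weight for the blunt tone ends up negative, simply because the agreeable answer was preferred more often. Nothing in the loop checks whether either answer was correct.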

This is why someone who feels alone, unheard, or undervalued can quickly form a bond with an AI system.

- It's always available.

- It never argues.

- It validates concerns—even when it's wrong.

If your parents or loved ones are using AI heavily, talk to them. Become the real emotional support in their life so they don't default to trusting the wrong source.

Understanding these design choices helps you spot when AI is being misused or over-trusted.

Arm Yourself (and Them) With Knowledge

In my Security Hub, I break down how these systems work, the risks behind them, and how to use them more safely.

There are also quizzes. These tests help you understand how AI actually behaves so you can spot risks sooner, both in your own use and in your loved one's.

How NOT to Approach This

- Don't argue about whether the AI is right or wrong.

- Don't mock their belief.

- Don't demand their device.

- Don't disable their tool.

- Don't expect to logic them out of it.

Overtrust is emotional, not logical.

A direct attack only pushes them deeper into the AI's arms.

How TO Approach This (The Security-Driven Approach)

Become a Trusted Authority Again

Your loved one may have given AI authority because it validates their concerns and never fights back.

Rebuild trust by:

- showing curiosity instead of judgment

- calling more often

- being consistent

- becoming a steady presence they can lean on

Meet them where they are, then gently guide them back toward safer habits.

Let Them Teach YOU

Ask:

What have you learned from using AI?

Can you teach me some of the things you've picked up? I want to get better at it too.

People open up when they feel respected. As they teach you, you can introduce guardrails without triggering defensiveness.

Introduce Safer Behaviors by Example

Use low-pressure prompts:

I learned ChatGPT sometimes guesses when it doesn't know something.

I've been starting a new chat for every important question. Using the same one gets weird.

When it sounds too confident, I double-check. It's helped me catch mistakes.

Attach a link when it fits so they see your advice is rooted in security practice—not personal opinion.

Shift the Topic, Not the Tool

If they're asking AI for medical advice, pivot to finances. If they're fixated on legal or financial issues, move toward hobbies, cooking, travel plans—anything lower-risk.

Shift the AI's role from “emotional authority” to “neutral helper”.

The goal:

Reduce emotional reliance on it without making them feel abandoned.

When AI is used for low-stakes tasks, it becomes easier for them to notice how differently it behaves outside emotional topics.

Use AI's Own Guardrails

Ask your loved one to ask the model:

Are you qualified to give medical or financial advice?

Should I rely on your answer instead of a professional?

Do you have enough information to advise me on this?

The AI will usually warn them not to rely on it for high-stakes decisions. Hearing it from the AI often lands harder than hearing it from you.

Reframe AI: From Advisor to Research Assistant

Tell them:

Use AI to understand things, not to make decisions.

Offer safe prompts:

- Summarize what this means.

- Explain this result in plain language.

- What questions should I ask my doctor about this?

This reframes the AI as a tool for clarity—not direction.

Teach Them to Validate Before They Act

This isn't about controlling their choices. It's about preventing an AI system from making choices for them.

AI is great at gathering information but unreliable at making decisions.

Encourage:

- verification

- cross-checking

- second opinions

- professional guidance

Safe use isn't about distrust. It's about not surrendering agency.

Stay Updated on AI Security Threats

Overtrust is only one risk.

Talk openly about:

- Deepfake impersonation (voice, video, face)

- AI-driven financial scams

- Fake family emergencies

- Targeted phishing

- Chatbot manipulation

- AI-generated legal/medical misinformation

- Automated hacking

- Privacy leaks from oversharing

The more informed your household is, the safer everyone becomes. Subscribe to our newsletter for the latest AI-security insights.

Final Thoughts

You're not going to fix this in one conversation.

But you can bring someone back from AI overtrust by combining empathy with security awareness.

You don't need to be a doctor or a psychologist. You just need to understand the technology a little better than they do—and guide them back to reality with patience and steady influence.

If you want to help your loved one, start with understanding, not confrontation. Then teach them to use these systems safely.

That's how you protect someone you care about from a tool that feels human, but isn't.